Metrics Quickstart


This guide helps you quickly implement the minimum set of metrics needed to measure and improve your Continuous Delivery performance. Start tracking today, improve tomorrow.

Why Metrics Matter

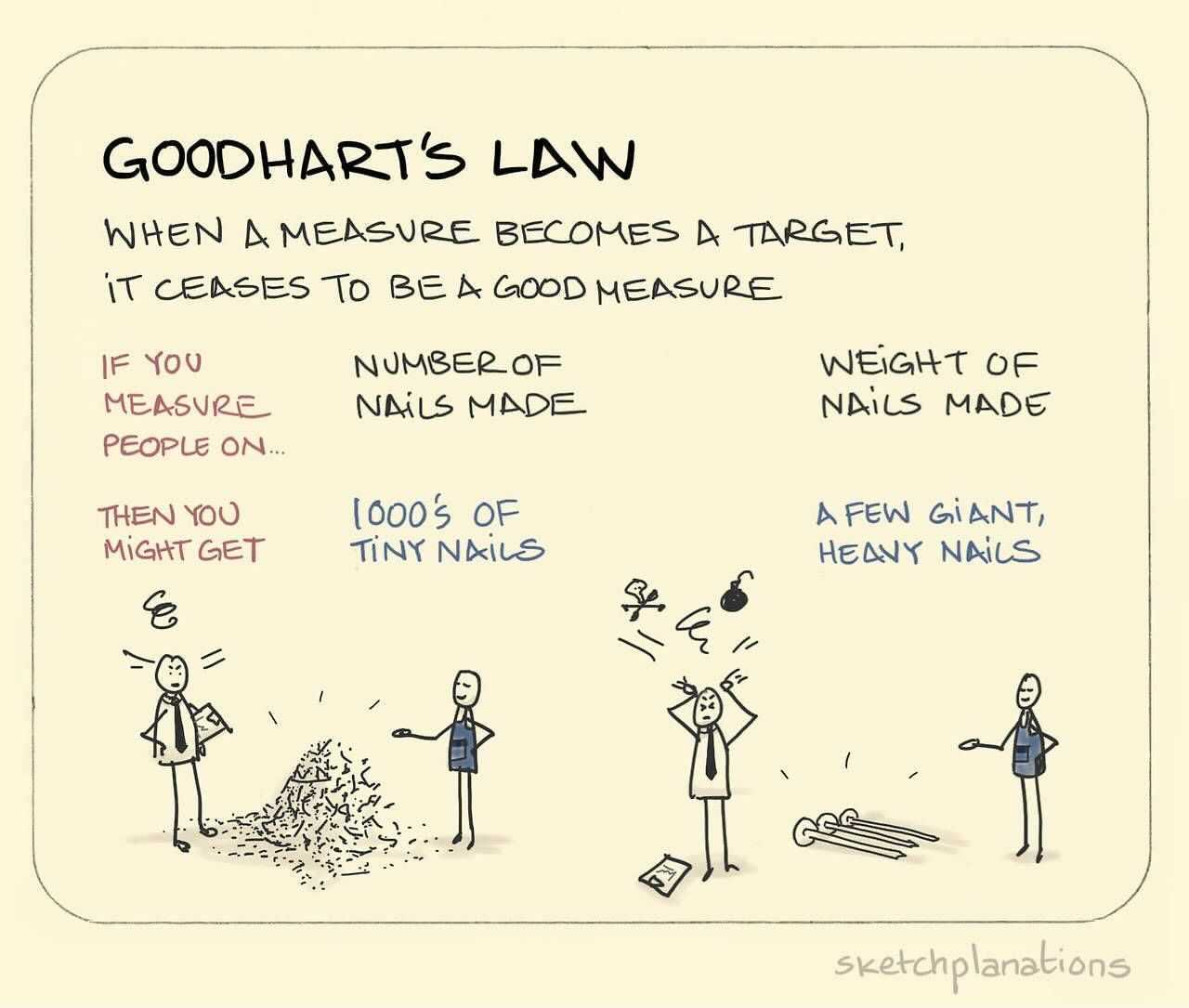

Goodhart's Law

Without metrics, improvement is guesswork.

Metrics help you:

- ✅ Identify bottlenecks in your delivery process

- ✅ Measure improvement over time

- ✅ Make data-driven decisions about where to focus

- ✅ Demonstrate value to leadership

- ✅ Prevent regression when optimizing

Critical

Use metrics in groups, never alone. Optimizing a single metric leads to unintended consequences. Always use offsetting metrics to maintain balance.

The Essential Four Metrics

Start with these four DORA metrics that predict delivery performance:

| Metric | Good Target | Purpose |
|---|---|---|
| Development Cycle Time | < 2 days | Measure delivery speed |
| Deployment Frequency | Multiple/day | Measure delivery throughput |
| Change Failure Rate | < 5% | Measure quality |
| Mean Time to Repair | < 1 hour | Measure recovery speed |

These four metrics balance speed (cycle time, deployment frequency) with stability (change failure rate, MTTR).

Day 1: Start Tracking

Step 1: Deployment Frequency (15 minutes)

What it measures: How often you deploy to production

Simplest implementation:

Query deployment frequency:
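A minimal sketch of both steps, assuming deployments are appended to a local CSV file by the last step of your pipeline. The file name `deployments.csv`, the column layout, and both function names are illustrative assumptions, not part of any particular tool.

```python
import csv
from collections import Counter
from datetime import datetime, timezone
from pathlib import Path

LOG_PATH = Path("deployments.csv")  # hypothetical log location


def log_deployment(version, status="success", log_path=LOG_PATH, when=None):
    """Append one row per production deployment: ISO timestamp, version, status."""
    when = when or datetime.now(timezone.utc)
    with open(log_path, "a", newline="") as f:
        csv.writer(f).writerow([when.isoformat(), version, status])


def deployments_per_week(log_path=LOG_PATH):
    """Count logged deployments per ISO week, keyed like '2025-W42'."""
    counts = Counter()
    with open(log_path, newline="") as f:
        for timestamp, _version, _status in csv.reader(f):
            year, week, _weekday = datetime.fromisoformat(timestamp).isocalendar()
            counts[f"{year}-W{week:02d}"] += 1
    return dict(counts)
```

Call `log_deployment("1.4.2")` at the end of each successful production deploy, then run `deployments_per_week()` whenever you want the current counts.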

Step 2: Development Cycle Time (20 minutes)

What it measures: Time from starting work to deploying to production

Track using git commits + deployment log:
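One way to approximate this is to treat the first commit on a branch as the start of work and the deployment timestamp as the end. The sketch below assumes a trunk branch named `main` and that you already have the deployment time from your deployment log; the function names are illustrative.

```python
import subprocess
from datetime import datetime


def first_commit_time(branch, base="main"):
    """Committer date of the oldest commit on `branch` that is not on `base`."""
    out = subprocess.run(
        ["git", "log", "--reverse", "--format=%cI", f"{base}..{branch}"],
        capture_output=True, text=True, check=True,
    ).stdout.split()
    return datetime.fromisoformat(out[0]) if out else None


def cycle_time_days(started_at, deployed_at):
    """Elapsed time between starting work and deploying, in days."""
    return (deployed_at - started_at).total_seconds() / 86400
```

First commit is only a proxy for "started work". If your team starts tickets well before the first commit, the issue tracker will give a more accurate start time.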

Alternative: Track in your issue tracker

Most teams find it easier to track cycle time in Jira/GitHub Issues:

See Development Cycle Time for detailed implementation.

Step 3: Change Failure Rate (10 minutes)

What it measures: Percentage of deployments that require remediation

Simple tracking:
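As a rough sketch, change failure rate is the number of failed deployments divided by the total number of deployments. The record shape (a dict with a boolean `failed` key) is an assumption made for illustration; adapt it to however you log deployments.

```python
def change_failure_rate(deployments):
    """Percentage of deployments flagged as failed (0.0 when there are none)."""
    deployments = list(deployments)
    if not deployments:
        return 0.0
    failed = sum(1 for d in deployments if d["failed"])
    return 100.0 * failed / len(deployments)


# Example: 23 deployments in a week, 2 of which needed remediation.
week = [{"version": f"v{i}", "failed": i in (4, 17)} for i in range(23)]
rate = round(change_failure_rate(week), 1)  # 8.7
```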

What counts as a failure:

- Deployment rollback

- Hotfix deployed within 24 hours

- Production incident caused by the change

- Manual intervention required

See Change Failure Rate for detailed guidance.

Step 4: Mean Time to Repair (10 minutes)

What it measures: How long it takes to restore service after an incident

Simple incident tracking:

Calculate MTTR:
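A minimal sketch, assuming each incident is recorded as a pair of timestamps: when the problem was detected and when service was restored. The tuple layout and function name are illustrative.

```python
from datetime import datetime


def mttr_minutes(incidents):
    """Mean minutes from detection to restoration; None if there are no incidents."""
    durations = [
        (restored - detected).total_seconds() / 60
        for detected, restored in incidents
    ]
    return sum(durations) / len(durations) if durations else None


incidents = [
    (datetime(2025, 10, 14, 9, 0), datetime(2025, 10, 14, 10, 0)),   # 60 minutes
    (datetime(2025, 10, 16, 14, 0), datetime(2025, 10, 16, 16, 0)),  # 120 minutes
]
average = mttr_minutes(incidents)  # 90.0
```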

Better: Integrate with incident management

See Mean Time to Repair for recovery strategies.

End of Day 1: You’re Tracking!

After one day, you should have:

- ✅ Deployment frequency logging

- ✅ Cycle time calculation (even if manual)

- ✅ Change failure tracking

- ✅ MTTR measurement

Calculate your baseline:
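The baseline is just the four numbers side by side for the same period. A small sketch, assuming you have already gathered the raw counts and durations for that period; the function and key names are hypothetical.

```python
from statistics import mean


def baseline(deploy_count, weeks, cycle_times_days, failed_deploys, repair_minutes):
    """Summarize the four essential metrics for one measurement period."""
    return {
        "deployments_per_week": deploy_count / weeks if weeks else None,
        "avg_cycle_time_days": mean(cycle_times_days) if cycle_times_days else None,
        "change_failure_rate_pct": (
            100 * failed_deploys / deploy_count if deploy_count else None
        ),
        "mttr_minutes": mean(repair_minutes) if repair_minutes else None,
    }


summary = baseline(
    deploy_count=10, weeks=2,
    cycle_times_days=[4.0, 5.0],
    failed_deploys=1,
    repair_minutes=[120, 240],
)
```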

Week 1: Make Metrics Visible

Create a Metrics Dashboard

Option 1: Simple Spreadsheet (30 minutes)

Create a Google Sheet or Excel file:

| Week | Deployments | Avg Cycle Time (days) | Change Failure Rate | MTTR (min) |
|---|---|---|---|---|
| 2025-W42 | 23 | 3.2 | 8.7% | 127 |
| 2025-W43 | 31 | 2.8 | 6.5% | 89 |
| 2025-W44 | 28 | 2.5 | 4.3% | 62 |

Update it weekly and display it prominently (print and post it, or share the link).

Option 2: Grafana Dashboard (2 hours)

Push metrics to Prometheus:
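Batch jobs usually reach Prometheus through a Pushgateway, which accepts metrics in the Prometheus text exposition format over HTTP. The sketch below assumes a Pushgateway at `http://localhost:9091` and a job name of `cd_metrics`; both values and the metric names are placeholders, and every metric is exported as a gauge for simplicity.

```python
import urllib.request


def to_exposition(metrics):
    """Render a {name: value} dict as Prometheus text-format gauges."""
    lines = []
    for name, value in metrics.items():
        lines.append(f"# TYPE {name} gauge")
        lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


def push_metrics(metrics, gateway="http://localhost:9091", job="cd_metrics"):
    """POST the metrics to the Pushgateway under the given job name."""
    request = urllib.request.Request(
        f"{gateway}/metrics/job/{job}",
        data=to_exposition(metrics).encode("utf-8"),
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```

A weekly job could then call `push_metrics({"cd_deployments_per_week": 28, "cd_mttr_minutes": 62})`, and Grafana can chart the resulting series from Prometheus.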

Option 3: Cloud Service (1 hour)

Use a hosted metrics service:

- Datadog - Application monitoring

- New Relic - Full-stack observability

- CloudWatch - AWS native

- Azure Monitor - Azure native

- Google Cloud Monitoring - GCP native

Example with Datadog:
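A hedged sketch that sends metrics to a locally running Datadog Agent using the DogStatsD datagram format over UDP. It assumes the Agent is listening on its default DogStatsD port (8125) on the same host; the metric names and tags are made up for illustration. In practice you may prefer Datadog's official client library.

```python
import socket


def dogstatsd_datagram(name, value, metric_type="g", tags=None):
    """Build a DogStatsD datagram such as 'cd.mttr_minutes:62|g|#team:payments'."""
    datagram = f"{name}:{value}|{metric_type}"
    if tags:
        datagram += "|#" + ",".join(tags)
    return datagram


def send_metric(name, value, metric_type="g", tags=None,
                host="127.0.0.1", port=8125):
    """Send one metric to the Agent; UDP is fire-and-forget, so no response is read."""
    payload = dogstatsd_datagram(name, value, metric_type, tags).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

Use type `"c"` for counters (for example, one increment per deployment) and `"g"` for gauges such as MTTR.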

Week 2: Add Supporting Metrics

Once the four essentials are stable, add these supporting metrics:

Integration Frequency

What it measures: How often code is integrated into trunk

Target: Multiple times per day per developer
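One rough way to measure this is to count commits that land on trunk per author and divide by the number of working days in the window. The sketch assumes trunk is called `main` and identifies developers by author email; both are assumptions to adjust for your repository.

```python
import subprocess
from collections import Counter


def trunk_commit_authors(branch="main", since="7 days ago"):
    """Author emails of commits on the trunk branch within the window."""
    out = subprocess.run(
        ["git", "log", branch, f"--since={since}", "--format=%ae"],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.split()


def integrations_per_developer_per_day(authors, working_days):
    """Average trunk integrations per working day for each developer."""
    if working_days <= 0:
        raise ValueError("working_days must be positive")
    return {author: n / working_days for author, n in Counter(authors).items()}
```

For a one-week window, `integrations_per_developer_per_day(trunk_commit_authors(), 5)` gives a per-developer figure to compare against the target.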

Build Duration

What it measures: Time from commit to deployable artifact

Target: < 10 minutes
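If your CI system does not already report build duration, a thin wrapper around the build command is enough to start. This sketch assumes the build is a single command you can run from a script; the function names and the example command are placeholders.

```python
import subprocess
import time


def timed_run(command):
    """Run a command and return (exit_code, elapsed_seconds)."""
    start = time.monotonic()
    result = subprocess.run(command)
    return result.returncode, time.monotonic() - start


def within_target(elapsed_seconds, target_minutes=10):
    """True when the build finished under the target duration."""
    return elapsed_seconds < target_minutes * 60
```

For example, `timed_run(["make", "build"])` returns the exit code and the elapsed seconds, which you can log next to each build.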

See Build Duration.

Work In Progress (WIP)

What it measures: Number of items in progress simultaneously

Track from your kanban board:
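A small sketch, assuming you can export board items as records with a `status` field. The status names below are assumptions; substitute the column names your board actually uses.

```python
IN_PROGRESS_STATUSES = {"In Progress", "In Review"}  # assumed column names


def wip_count(items, in_progress=IN_PROGRESS_STATUSES):
    """Number of board items currently in an in-progress column."""
    return sum(1 for item in items if item["status"] in in_progress)


def wip_within_target(items, team_size):
    """True when WIP is below the team size."""
    return wip_count(items) < team_size


board = [
    {"id": 1, "status": "In Progress"},
    {"id": 2, "status": "In Review"},
    {"id": 3, "status": "To Do"},
    {"id": 4, "status": "In Progress"},
    {"id": 5, "status": "Done"},
]
```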

Target: WIP < Team Size (ideally half)

See Work In Progress.

Metric Groups: Use Together

Goodhart's Law

“When a measure becomes a target, it ceases to be a good measure.”

Never optimize a single metric. Use offsetting groups:

Speed vs. Quality Group

| Speed Metrics | Quality Metrics |
|---|---|
| Deployment Frequency ⬆️ | Change Failure Rate ⬇️ |
| Cycle Time ⬇️ | Defect Rate ⬇️ |

Example: If you increase deployment frequency but change failure rate also increases, you’re going too fast. Slow down and improve quality feedback.

Flow vs. WIP Group

| Flow Metrics | Constraint Metrics |
|---|---|
| Throughput ⬆️ | WIP ⬇️ |
| Velocity ⬆️ | Lead Time ⬇️ |

Example: High velocity with high WIP means you’re starting too much work. Reduce WIP to improve flow.

See Metrics Cheat Sheet for comprehensive metric relationships.

Analyzing Your Metrics

Week 1: Establish Baseline

Don’t change anything. Just measure.

Week 1 Baseline:

- Deployment Frequency: 5/week

- Cycle Time: 4.2 days

- Change Failure Rate: 12%

- MTTR: 180 minutes

Week 2-4: Identify Bottlenecks

Look for patterns:

High Cycle Time + Low Deployment Frequency = Batch Size Problem

- Solution: Story Slicing

High Change Failure Rate = Quality Feedback Problem

- Solution: Improve Testing

High MTTR = Recovery Process Problem

- Solution: Automate rollback, improve monitoring

High WIP + Low Velocity = Context Switching Problem

- Solution: Limit WIP
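The patterns above can be sketched as a simple rule check. The guide does not define "high" or "low", so the thresholds here are assumptions: the cycle time, change failure rate, and MTTR limits reuse the targets from the essential metrics table, "low deployment frequency" is taken as fewer than five per week, and "low velocity" as a drop from the previous period.

```python
def diagnose(metrics, team_size):
    """Return the bottleneck patterns that match the given metrics."""
    findings = []
    if metrics["cycle_time_days"] > 2 and metrics["deployments_per_week"] < 5:
        findings.append("Batch size problem: try story slicing")
    if metrics["change_failure_rate_pct"] > 5:
        findings.append("Quality feedback problem: improve testing")
    if metrics["mttr_minutes"] > 60:
        findings.append(
            "Recovery process problem: automate rollback, improve monitoring"
        )
    if metrics["wip"] > team_size and metrics["velocity"] < metrics["previous_velocity"]:
        findings.append("Context switching problem: limit WIP")
    return findings


example = {
    "cycle_time_days": 4.2,
    "deployments_per_week": 3,
    "change_failure_rate_pct": 4.0,
    "mttr_minutes": 180,
    "wip": 9,
    "velocity": 18,
    "previous_velocity": 21,
}
findings = diagnose(example, team_size=6)
```

Treat the output as a prompt for discussion in the weekly review, not as a verdict.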

Month 2+: Continuous Improvement

Set improvement targets:

Month 1 Target (achievable):

- Deployment Frequency: 10/week (+100%)

- Cycle Time: 3.0 days (-29%)

- Change Failure Rate: 8% (-33%)

- MTTR: 120 minutes (-33%)

Month 3 Target (stretch):

- Deployment Frequency: Daily

- Cycle Time: < 2 days

- Change Failure Rate: < 5%

- MTTR: < 60 minutes
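The percentages next to the Month 1 targets are relative changes from the Week 1 baseline. A tiny helper makes the arithmetic explicit; the function name is illustrative.

```python
def percent_change(baseline_value, target_value):
    """Relative change from baseline to target, as a percentage."""
    return (target_value - baseline_value) / baseline_value * 100


deploys = round(percent_change(5, 10))    # 5/week to 10/week
mttr = round(percent_change(180, 120))    # 180 minutes to 120 minutes
```

Here `deploys` is 100 and `mttr` is -33, matching the deployment frequency and MTTR targets listed for Month 1.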

Common Pitfalls

❌ Pitfall 1: Gaming the Metrics

Example: Moving tickets to “Done” before actually deploying

Solution:

- Definition of Done must include “deployed to production”

- Automate metric collection from deployment logs, not issue tracker

❌ Pitfall 2: Vanity Metrics

Example: “We deployed 100 times this month!” (but 20 were rollbacks)

Solution:

- Always pair speed metrics with quality metrics

- Track both successes and failures

❌ Pitfall 3: Metric Theater

Example: Spending more time tracking metrics than improving

Solution:

- Automate data collection

- Review metrics weekly, not daily

- Focus on trends, not point-in-time values

❌ Pitfall 4: Using Metrics as Punishment

Example: “Bob’s change had a failure, Bob is a bad developer”

Solution:

- Metrics measure the system, not individuals

- Use metrics to identify process problems, not blame people

- Celebrate improvements, not perfection

Advanced Metrics (Month 2+)

Once the essentials are stable, consider:

Quality Metrics

- Code Coverage - But don’t target 100%

- Defect Rate - Production bugs per release

- Code Inventory - Undeployed code

Flow Metrics

- Lead Time - Idea to production

- Velocity - Work completed per sprint

- Average Build Downtime - CI availability

Metric Automation Tools

Open Source

- Grafana + Prometheus - Visualization + metrics

- Loki - Log aggregation

- Elasticsearch + Kibana - Search and analytics

- Graphite - Time series database

Commercial

- Datadog - Full-stack observability

- New Relic - Application performance

- Splunk - Log analysis and metrics

- LinearB - Engineering metrics platform

- Sleuth - Deployment tracking

CI/CD Native

- GitHub Insights - Built into GitHub

- GitLab Analytics - Built into GitLab

- Azure DevOps Analytics - Built into Azure DevOps

- CircleCI Insights - Built into CircleCI

Sample Implementation: Complete Script

Here’s a complete bash script to get started:

Set up daily collection:

Success Criteria

After implementing metrics, you should have:

- ✅ All four DORA metrics tracked automatically

- ✅ Baseline established (1 week of data)

- ✅ Dashboard visible to the entire team

- ✅ Weekly review scheduled

- ✅ Improvement targets defined

- ✅ Metric groups balanced (speed + quality)

Next Steps

- Automate collection - Stop manual tracking

- Add visualizations - Trend lines, not just numbers

- Set up alerts - Get notified when metrics degrade

- Correlate metrics - Find relationships between metrics

- Share widely - Make metrics visible to stakeholders

Further Reading

- Metrics Overview - Complete metrics catalog

- Metrics Cheat Sheet - Quick reference guide

- Development Cycle Time - Detailed implementation

- Change Failure Rate - Measuring quality

- Quality Metrics - Comprehensive quality measures

- Accelerate Book - Research behind DORA metrics